Human preferences often decompose naturally into rewards and constraints. For example, imagine you have an autonomous car and tell it to drive you to the grocery store. This task has a goal that is well described by a reward function, such as the negative distance to the grocery store (so that getting closer means higher reward). However, other implicit parts of the task, such as following driving rules or making sure the drive is comfortable, are more naturally described as constraints.

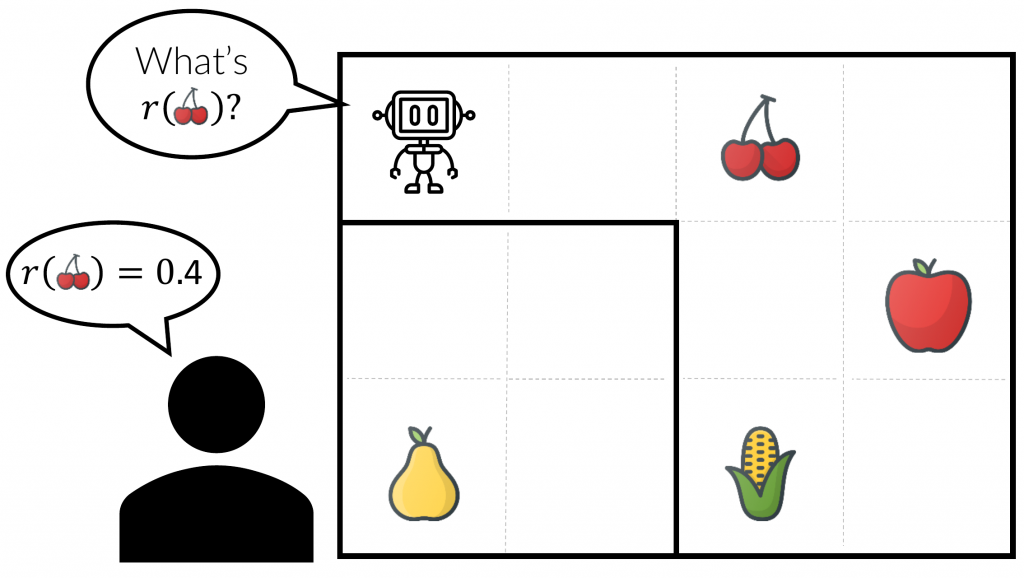
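To make the grocery-store example concrete, here is a minimal sketch of the reward/constraint split. Everything in it is an illustrative assumption rather than a method from this post: the `Drive` summary class, its fields, the threshold values, and the choice to treat constraints as hard yes/no checks are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Drive:
    """Hypothetical summary of one candidate drive (all fields are illustrative)."""
    name: str
    final_distance_m: float  # remaining distance to the store when the drive ends
    max_speed_kmh: float     # highest speed reached during the drive
    max_accel_ms2: float     # strongest acceleration felt by the passenger


def reward(drive):
    # Goal: end up close to the store, so the reward is the negative remaining distance.
    return -drive.final_distance_m


# Constraints as (description, check) pairs; the thresholds are made-up values.
CONSTRAINTS = [
    ("follow driving rules (speed limit)", lambda d: d.max_speed_kmh <= 50.0),
    ("comfortable ride", lambda d: d.max_accel_ms2 <= 3.0),
]


def is_feasible(drive):
    # A drive is acceptable only if it satisfies every constraint.
    return all(check(drive) for _, check in CONSTRAINTS)


def best_feasible(drives):
    # Maximize reward, but only among drives that satisfy all constraints.
    feasible = [d for d in drives if is_feasible(d)]
    return max(feasible, key=reward) if feasible else None


candidates = [
    Drive("reckless", final_distance_m=0.0, max_speed_kmh=90.0, max_accel_ms2=6.0),
    Drive("careful", final_distance_m=0.0, max_speed_kmh=45.0, max_accel_ms2=2.0),
    Drive("gives up", final_distance_m=800.0, max_speed_kmh=30.0, max_accel_ms2=1.0),
]

best = best_feasible(candidates)
print(best.name)  # prints "careful"
```

The "reckless" drive reaches the store just as well as the "careful" one, so the reward alone cannot tell them apart; only the constraints rule it out. The "gives up" drive satisfies every constraint but scores poorly on reward.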

A key challenge for deploying AI systems in the real world is to ensure that they act “in accordance with their users’ intentions” – that they do what we want them to do. The most common way of communicating to an AI system what we want it to do is through designing a reward (or cost) function. However, in practice, it is challenging to specify good reward functions, and misspecified reward functions can lead to all kinds of undesired behavior.