Learning as an Iterative Process

A key challenge in many learning tasks is deciding what to learn next. For example, imagine you are trying to pick up a new hobby. One way to learn it quickly is to keep a reading list of books written by experts. As you work through the list, you’ll need to decide which books are worth the time it takes to cover all of their material. If your goal is to learn chess, for instance, there’s no need to read a beginner’s book twice when you are ready to move on to new opening tactics.

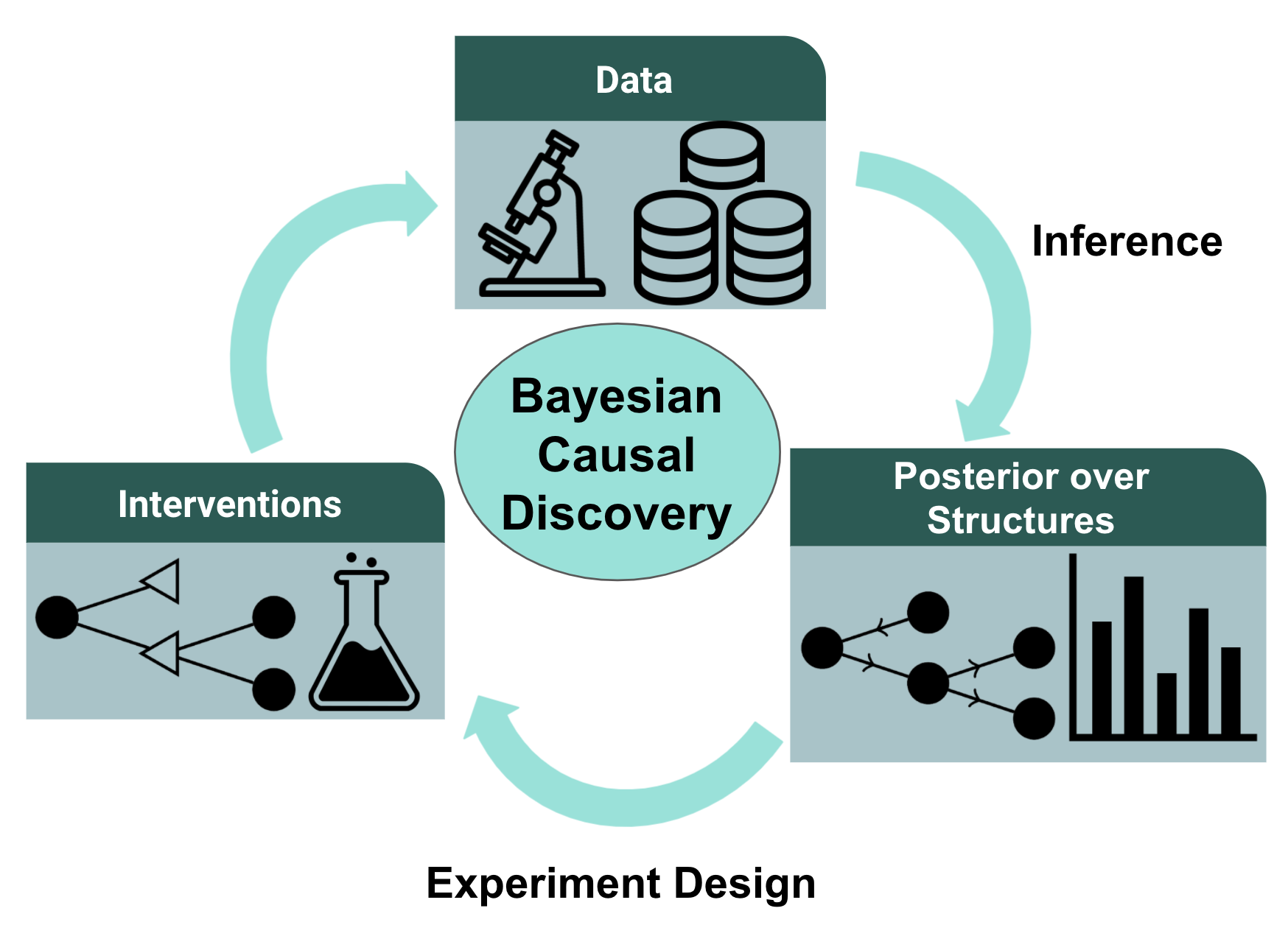

How can you intelligently choose the next book? Active learning algorithms can guide that choice methodically.

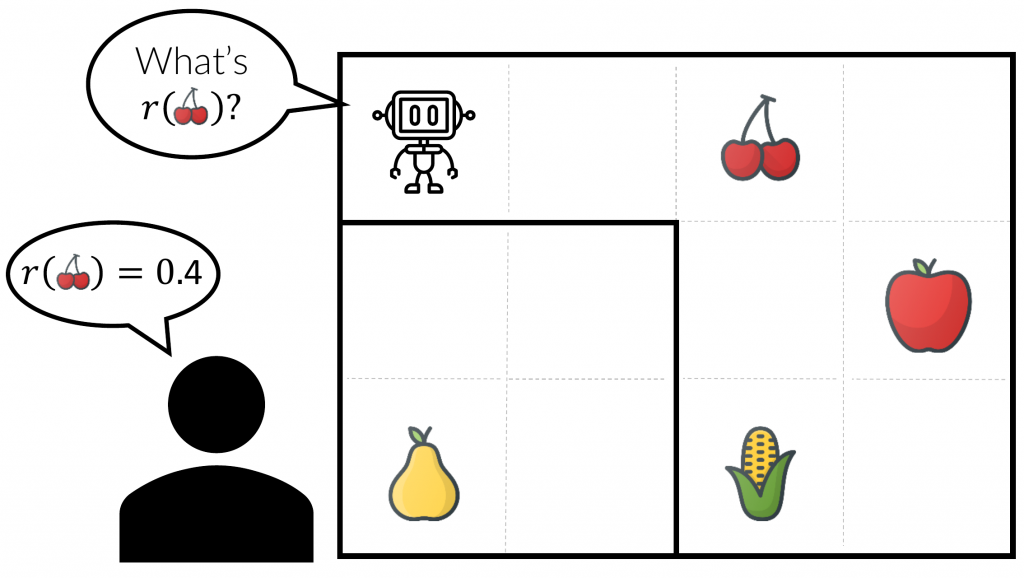

Let’s take our chess learning problem and model it as an Active Learning problem. Suppose that each book can be represented as a set of features that uniquely identify it.…